Beyond the Bucket: Design Decisions That Power AWS S3

How smart engineering principles and architectural patterns built cloud storage's silent giant

Take a moment to consider this: by the time you finish reading this sentence, S3 will have processed hundreds of millions of requests, moving data at several hundred terabits per second. By the end of this one, it will have handled hundreds of millions more, possibly approaching a billion in total. For any engineer fascinated by large-scale systems, S3's sheer operational dexterity is nothing short of captivating.

This isn't merely about how S3 stores files. From serving up your favourite media (images, videos, and music) to reliably holding the logs that fuel massive analytics platforms and the data that drives distributed applications, S3 is the foundational engine enabling scalable and durable storage across the globe.

Today, S3 is home to a staggering 400 trillion objects, spread across 31 regions and 99 availability zones worldwide. This vast geographical footprint isn't just for show; it's the very core of how S3 achieves its commendable availability and 99.999999999% durability. Data isn't simply stored; it's meticulously replicated across multiple facilities within each region, allowing the system to flawlessly navigate potential infrastructure hiccups — be it an operator's rare misstep, a failing hard drive, or even an entire facility going offline — without missing a beat.

Let's put the operational prowess of S3 into perspective:

Throughput: It reliably handles data at hundreds of terabits per second.

Peak Performance: Its front-end web server fleet routinely processes around 1 PB/s bandwidth at peak.

Indexing at Scale: An intelligent index designed to effortlessly manage 100 million requests per second.

Global Reach: Securing 400 trillion objects across its expansive global network.

Durability: Achieving 99.999999999% durability, which means the chance of losing an object is statistically negligible.

Replication: Data replication across multiple facilities ensures continuous service, shrugging off various infrastructure failures.

In this article, we'll peel back the layers to reveal the ingenious design principles, powerful patterns, and critical architectural decisions made by the AWS S3 team. These are the integral pieces that transformed S3 into the indispensable, robust, and truly captivating cloud storage powerhouse it is today.

Multi-Part Uploads (MPUs)

A Throughput Multiplier

Uploading large objects as a single unit can create bottlenecks. S3's "Multi-Part Upload" feature solves this with a simple yet profoundly impactful design decision.

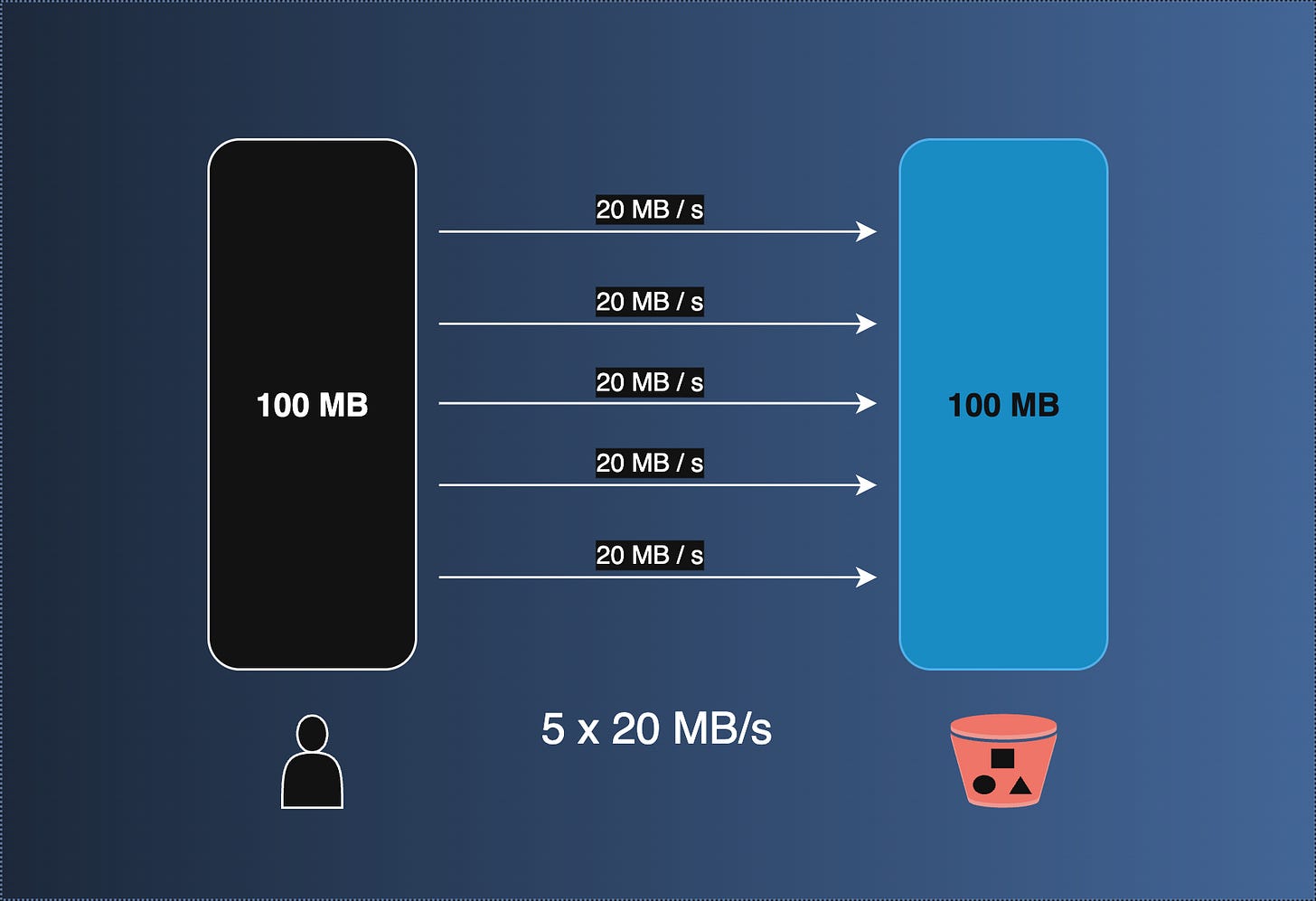

Instead of a monolithic upload, a large object (e.g., 100 MB) is broken into smaller chunks (e.g., five 20 MB parts). This approach delivers:

Parallel Processing: Each chunk can be uploaded in parallel.

Throughput Boost: This parallelism directly multiplies upload speed, leveraging multiple concurrent connections.

Enhanced Resilience: If one part fails, only that specific chunk needs re-transmission, not the entire object.

This design significantly improves the reliability and speed of large file transfers, making it crucial for everything from massive data archives to high-resolution media.
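To make the split, parallel upload, and per-part retry concrete, here is a minimal sketch. It does not call S3: `FakeStore`, `upload_with_retry`, and `multipart_upload` are hypothetical names, and the in-memory store merely stands in for the service so the flow can be run locally.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_parts(data, part_size):
    """Return (part_number, chunk) pairs; part numbers start at 1."""
    return [
        (i // part_size + 1, data[i:i + part_size])
        for i in range(0, len(data), part_size)
    ]


class FakeStore:
    """Hypothetical in-memory stand-in for the storage service."""

    def __init__(self, fail_once=()):
        self.parts = {}
        self.fail_once = set(fail_once)  # part numbers that fail on first try

    def upload_part(self, part_number, chunk):
        if part_number in self.fail_once:
            self.fail_once.discard(part_number)
            raise IOError(f"transient failure on part {part_number}")
        self.parts[part_number] = chunk

    def complete(self):
        # Reassemble the object in part-number order.
        return b"".join(self.parts[n] for n in sorted(self.parts))


def upload_with_retry(store, part_number, chunk, attempts=3):
    """Retry only this part; the other parts are unaffected."""
    for attempt in range(attempts):
        try:
            store.upload_part(part_number, chunk)
            return
        except IOError:
            if attempt == attempts - 1:
                raise


def multipart_upload(store, data, part_size, workers=5):
    parts = split_into_parts(data, part_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(upload_with_retry, store, n, c) for n, c in parts]
        for future in futures:
            future.result()  # surface any part that failed every attempt
    return store.complete()
```

Note that when part 2 fails once in this sketch, only part 2 is sent again, which is the resilience property described above.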

Range Gets

Parallel Downloads for Enhanced Retrieval

Following a similar philosophy to multi-part uploads, S3's "Range Get" capability optimizes the download experience for large objects. Instead of fetching an entire large file in one go, a client can request specific byte ranges of that object.

This translates into several advantages:

Parallel Fetching: Multiple range requests can be initiated in parallel. For instance, a 100MB object can be fetched as five 20MB segments concurrently.

Throughput Acceleration: Just like multi-part uploads, this parallel fetching significantly boosts download speeds, maximizing network utilization.

Efficient Resumption: If a download is interrupted, only the missing ranges need to be requested, rather than restarting the entire download.

Partial Content Access: It enables applications to access only the necessary portions of a large file, useful for tasks like streaming video (where only the currently viewed segment is needed) or processing large datasets in chunks without downloading the whole object.

Range Gets are thus crucial for high-performance data retrieval and flexible content access from S3.
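A small sketch of how a client might carve an object into byte ranges and fetch them concurrently. The HTTP `Range: bytes=start-end` header uses inclusive offsets; everything else here (`byte_ranges`, `fetch_range`, `parallel_download`, and the in-memory object) is a hypothetical stand-in rather than a real S3 client.

```python
from concurrent.futures import ThreadPoolExecutor


def byte_ranges(total_size, chunk_size):
    """Inclusive (start, end) pairs covering the whole object."""
    return [
        (start, min(start + chunk_size, total_size) - 1)
        for start in range(0, total_size, chunk_size)
    ]


def range_header(start, end):
    """Value of the HTTP Range header for one segment."""
    return f"bytes={start}-{end}"


def fetch_range(obj, start, end):
    # Stand-in for a ranged GET; a real client would send range_header().
    return obj[start:end + 1]


def parallel_download(obj, chunk_size, workers=5):
    ranges = byte_ranges(len(obj), chunk_size)
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # map() yields results in request order, so the join is correct.
        chunks = pool.map(lambda r: fetch_range(obj, *r), ranges)
        return b"".join(chunks)
```

Resuming an interrupted download amounts to calling `fetch_range` again for just the ranges that never arrived.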

Multi-Value DNS

Spreading the Load for True Parallelism

The brilliance of Multi-Part Uploads and Range Gets – splitting data into smaller chunks for parallel processing – would be significantly undermined if all those chunks were routed to a single S3 web server. This is where Multi-Value DNS steps in as a critical architectural component.

Here's how it enhances S3's performance and resilience:

Distributed Processing: When a client resolves an S3 endpoint using DNS, Multi-Value DNS returns multiple IP addresses for different web servers in the S3 fleet.

Workload Distribution: This allows the client to distribute requests for different object chunks across these various web servers. Instead of one server handling five concurrent 20MB requests, five different servers each handle one 20MB request.

Offloading Individual Servers: This strategy effectively offloads individual web servers, preventing any single server from becoming a bottleneck due to its inherent limits on CPU, memory, or I/O capacity. Imagine distributing five heavy backpacks among five friends, rather than one person struggling with all of them.

Workload Decorrelation: A less obvious but equally vital benefit of Multi-Value DNS is "workload decorrelation." This concept, which we'll explore further soon, ensures that potential issues affecting one server or a small group of servers don't cascade and impact a large portion of client requests.

By leveraging Multi-Value DNS, S3 ensures that the benefits of parallel object processing are fully realized, distributing the load across its massive fleet of front-end servers for optimal performance and fault tolerance.
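On the client side, the idea can be sketched with the standard library alone. `socket.getaddrinfo` returns every address the resolver offers for a name; the round-robin assignment in `assign_chunks` is my own simplification of how a client could spread chunks over those addresses, not a description of what any AWS SDK does.

```python
import socket


def resolve_all(host, port=443):
    """Return every distinct address the resolver offers for host."""
    infos = socket.getaddrinfo(host, port, type=socket.SOCK_STREAM)
    addresses = []
    for info in infos:
        address = info[4][0]  # sockaddr is the fifth field; its first item is the IP
        if address not in addresses:
            addresses.append(address)
    return addresses


def assign_chunks(chunk_ids, addresses):
    """Round-robin chunk ids over the available addresses."""
    if not addresses:
        raise ValueError("no addresses to assign chunks to")
    return {
        chunk: addresses[i % len(addresses)]
        for i, chunk in enumerate(chunk_ids)
    }
```

With five addresses and five chunks, each chunk lands on a different server; with fewer addresses, the assignment simply wraps around.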

Chain of Custody

Guaranteeing Data Integrity

At the heart of S3's legendary durability is an exhaustive "Chain of Custody" process, driven by continuous checksumming. This robust system ensures data integrity from the moment an object is ingested until it's safely stored and continuously accessible.

Here's how this critical process works:

End-to-End Verification: S3 employs a comprehensive checksumming process throughout an object's lifecycle. It supports highly performant algorithms such as CRC32 and CRC32C.

Multi-Level Checksums on Ingestion: When a storage request arrives, checksums are calculated at multiple points along the data path to storage.

Crucial "Reverse Transformation": Before acknowledging a successful write, S3 performs a vital "reverse transformation." It re-derives the data from its stored components and recalculates its checksum. This verifies that what was received is precisely what was stored.

Dual-Flow Validation: For every storage request, S3 calculates checksums during the forward flow (data coming in) and then meticulously rechecks them during the backward flow (data confirmation). Only if all validations pass is a 200 OK (success) response returned to the user.

Continuous Integrity at Scale: This rigorous process enables S3 to process billions of checksums per second across its entire storage fleet. This continuous vigilance allows for immediate detection of any bit flips or data corruption at rest, triggering automatic recovery actions.

This "Chain of Custody" is a fundamental reason S3 can confidently boast its remarkable durability, ensuring the integrity of your data against silent corruption.
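The verify-before-acknowledge idea can be sketched as follows. This is a toy model under stated assumptions: the dictionary-backed `storage`, the fixed-size sharding, and the names `put_object` and `scrub` are all hypothetical, and `zlib.crc32` is used because it ships with Python (CRC32C is not in the standard library).

```python
import zlib


class IntegrityError(Exception):
    """Raised when a checksum does not match."""


def put_object(storage, key, data, client_crc, shard_size=4):
    # Forward flow: check the bytes that arrived against the sender's checksum.
    if zlib.crc32(data) != client_crc:
        raise IntegrityError("checksum mismatch on arrival")
    # Transform for storage (here: naive fixed-size shards).
    storage[key] = [
        data[i:i + shard_size] for i in range(0, len(data), shard_size)
    ]
    # Reverse transformation: rebuild from what was stored and re-check.
    if zlib.crc32(b"".join(storage[key])) != client_crc:
        del storage[key]
        raise IntegrityError("checksum mismatch after store")
    return 200  # acknowledge only after every check has passed


def scrub(storage, key, expected_crc):
    """At-rest check: True if the stored shards still match the checksum."""
    return zlib.crc32(b"".join(storage[key])) == expected_crc
```

A background process calling something like `scrub` over and over is the toy equivalent of the continuous at-rest checking described above.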

Erasure Coding (EC)

The Foundation of S3's Unmatched Durability

S3's almost mythical durability (those eleven nines!) isn't magic; it's meticulously engineered, largely thanks to Erasure Coding (EC). This isn't just basic data copying; it is a far more efficient and resilient way to protect your data.

Here's how it works:

Smart Data Breaking: Your data isn't stored as one big blob. Instead, it's:

Broken into several "data shards" or chunks.

Then, "parity shards" are cleverly generated from these data pieces using advanced algorithms.

Wide Distribution, Not Just Local: These shards aren't just spread across different drives in one spot. They are distributed:

Across multiple, distinct storage devices within a data center.

Crucially, across multiple Availability Zones (AZs) within the region. Copies in other regions come from opt-in Cross-Region Replication rather than from erasure coding itself.

Rebuilding from Chaos: This widespread distribution is incredibly powerful. Even if multiple drives, or even entire facilities, go offline, S3 can flawlessly reconstruct your lost data from the remaining healthy shards.

AZ-Proofing: This strategy is so robust that standard S3 storage classes can withstand the complete loss of an entire Availability Zone without any impact on data integrity. Your data simply persists.

S3 Express One Zone – A Calculated Choice: For the ultra-fast S3 Express One Zone, there's a specific design trade-off. Data lives in a single AZ for peak performance. This means, unlike other classes, a full or partial AZ failure could lead to data loss, prioritizing speed above all else.

Lightning-Fast Repairs: S3 maintains "slack space" across its fleet, allowing for immediate, parallelized data recovery when a drive fails. This dramatically shrinks the window where data is vulnerable, boosting overall system resilience.

Erasure Coding, with its intelligent distribution and rapid recovery, is the bedrock allowing S3 to deliver its industry-leading durability guarantees.
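To give a feel for data shards and parity shards, here is the simplest possible erasure code: k data shards plus a single XOR parity shard, which can rebuild any one lost shard. This is a deliberate simplification for illustration; production systems use stronger codes that tolerate several simultaneous losses, and nothing here reflects the actual scheme or parameters S3 uses.

```python
def xor_bytes(a, b):
    return bytes(x ^ y for x, y in zip(a, b))


def encode(data, k):
    """Split data into k equal-size data shards plus one XOR parity shard."""
    shard_len = -(-len(data) // k)  # ceiling division
    padded = data.ljust(shard_len * k, b"\0")
    shards = [padded[i * shard_len:(i + 1) * shard_len] for i in range(k)]
    parity = shards[0]
    for shard in shards[1:]:
        parity = xor_bytes(parity, shard)
    return shards + [parity]


def reconstruct(shards):
    """Rebuild the single missing shard (marked None) from the survivors."""
    missing = [i for i, shard in enumerate(shards) if shard is None]
    if len(missing) > 1:
        raise ValueError("single parity can repair only one lost shard")
    repaired = list(shards)
    if missing:
        survivors = [shard for shard in shards if shard is not None]
        rebuilt = survivors[0]
        for shard in survivors[1:]:
            rebuilt = xor_bytes(rebuilt, shard)
        repaired[missing[0]] = rebuilt
    return repaired


def decode(shards, original_len):
    """Join the data shards (everything but parity) and strip the padding."""
    return b"".join(shards[:-1])[:original_len]
```

Compared with keeping full copies, this toy layout stores only one extra shard for every k data shards, which hints at why erasure coding is more space-efficient than plain replication.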

We are just scratching the surface of EC in this article. I will soon be writing a detailed article about Erasure Coding, where we will dive deeper into the algorithm and other specifics.

Shuffle Sharding

The Secret to S3's Elasticity

S3's "elastic" nature, allowing any workload to burst and leverage the entire storage fleet, is fundamentally achieved through Shuffle Sharding. This powerful design concept is central to both data distribution and access.

Here's how it works and its profound implications:

Dynamic Resource Spreading: When you upload a resource into an S3 bucket, it is broken into shards and dynamically spread across a randomly chosen subset of drives from the S3 fleet. The next time a resource is uploaded to the same bucket, it is sent to a different set of drives. Storage locations are not static.

Overcoming Physical Limitations: This ensures your resources are spread across more disks than immediately needed, proactively addressing throughput bottlenecks inherent to individual drives.

Workload Decorrelation: AWS calls this intentional workload decorrelation. By randomly distributing data and requests, S3 ensures:

No Hotspots: Workloads are inherently balanced.

Enhanced Resilience: Issues on one node affect only a small, uncorrelated fraction of the total workload.

Dynamic DNS Resolution & Fault Tolerance: When fetching data, requests are often routed to a different set of node servers each time. If a request fails due to a server issue, a retry is highly likely to succeed as it will be directed to a different, healthy set of servers. Your buckets are mapped dynamically, not statically.

Through Shuffle Sharding, S3 achieves remarkable elasticity, performance, and fault tolerance against individual component failures.
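A tiny simulation shows why random placement limits the damage of a single failure. The fleet size, shard count, and function names below are illustrative assumptions, not S3's real parameters.

```python
import random


def place_object(fleet_size, shard_count, rng):
    """Pick a fresh random subset of drives for one object's shards."""
    return rng.sample(range(fleet_size), shard_count)


def blast_radius(placements, failed_drive):
    """Fraction of objects with at least one shard on the failed drive."""
    hit = sum(1 for drives in placements if failed_drive in drives)
    return hit / len(placements)


if __name__ == "__main__":
    rng = random.Random(7)
    # 2,000 objects, each spread over 8 of 1,000 drives (made-up numbers).
    placements = [place_object(1000, 8, rng) for _ in range(2000)]
    print(f"objects touched by one drive failure: {blast_radius(placements, 0):.2%}")
```

Because every object gets its own random subset, one bad drive touches only a small fraction of objects, and two objects rarely share the same full set of drives.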

Common Runtime (CRT)

Empowering S3's Advanced Features

The true power of S3's sophisticated architecture is made accessible to developers through the AWS Common Runtime (CRT). This low-level, open-source component library, integrated into the AWS SDK, allows engineers worldwide to leverage S3's advanced capabilities without managing their underlying complexity.

The CRT automates and optimizes interactions with S3, enabling features such as automated Multi-Part Uploads, parallelized Range Gets, and "smart" retries that take advantage of Shuffle Sharding.

A particularly impactful feature of the CRT is its intelligent retry mechanism. It continuously monitors the 95th percentile (P95) of request latencies. If a request's latency exceeds this P95 threshold, the CRT:

Intentionally cancels the lagging request.

Retries it, crucially leveraging Shuffle Sharding. This means the new attempt is routed to a different set of front-end servers, significantly increasing the probability of faster processing due to workload decorrelation.

This active management of tail latencies by the CRT pulls in the slowest requests and improves overall performance, showcasing how S3's various design patterns work together to deliver a superior experience.
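The cancel-and-retry rule can be sketched in a few lines. This is an illustration, not the CRT's code or API: `p95`, `request_with_retry`, and the `send(server, timeout_ms)` callable are hypothetical, and the nearest-rank percentile is simply one reasonable way to compute a P95.

```python
def p95(latencies_ms):
    """Nearest-rank 95th percentile of observed latencies."""
    ordered = sorted(latencies_ms)
    rank = (95 * len(ordered) + 99) // 100  # ceil(0.95 * n) in integer math
    return ordered[rank - 1]


def request_with_retry(send, servers, history_ms, max_attempts=3):
    """Try servers in turn, abandoning any attempt slower than the P95 budget.

    send(server, timeout_ms) is a hypothetical callable that returns a
    response, or raises TimeoutError once timeout_ms has elapsed.
    """
    budget = p95(history_ms)
    for server in servers[:max_attempts]:
        try:
            return send(server, budget)
        except TimeoutError:
            continue  # give up on the straggler and try a different server
    raise TimeoutError("all attempts exceeded the P95 budget")
```

The important detail is that each retry goes to a different server, so a slow host is unlikely to be hit twice in a row.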

This integration of client-side intelligence with server-side architecture paints a comprehensive picture of S3's design. However, a key question remains: how exactly does Shuffle Sharding identify the optimal disks to ensure heat is evenly spread across the massive storage fleet?

The answer lies in the power of "randomness"—more specifically, the strategy of "Two Random Choices."

Power of Randomness

The "Two Random Choices" Advantage

Consider the challenge:

The Scale Problem: With hundreds of millions of writes per second across an immense number of drives, a brute-force search for the "least occupied" drive is computationally impractical. Similarly, maintaining a real-time, optimized data structure like a priority queue for so many drives is impractical at this scale.

Simple Randomness is Insufficient: While simply picking one random drive is better than a full scan, pure mathematical randomness, over time, tends to lead to uneven distributions and "hotspots."

This is where the genius of "Two Random Choices" comes in:

The Clever Tweak: For any given write request, S3 doesn't just pick one random drive. Instead, it randomly selects two distinct drives from its vast fleet.

The "Best" Choice: It then compares the load or occupancy of these two chosen drives and places the data shard on the less occupied of the two.

This seemingly small algorithmic tweak yields dramatic improvements in storage efficiency and load distribution. By simply having the option to choose between two random contenders, S3 achieves a significantly more even spread of workload across its fleet, ensuring no single drive becomes a bottleneck and optimizing the utilization of its massive storage infrastructure. It's a prime example of how a simple, yet mathematically powerful, concept can solve complex problems at extreme scale.
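The effect is easy to see in a simulation. The sketch below compares the fullest drive after placing writes with one random choice versus two; the drive and write counts are arbitrary, and "load" is simply a counter standing in for whatever occupancy metric a real system would use.

```python
import random


def max_load_one_choice(drives, writes, rng):
    """Place each write on a single random drive; return the fullest drive's load."""
    load = [0] * drives
    for _ in range(writes):
        load[rng.randrange(drives)] += 1
    return max(load)


def max_load_two_choices(drives, writes, rng):
    """Pick two distinct random drives per write and use the less loaded one."""
    load = [0] * drives
    for _ in range(writes):
        a, b = rng.sample(range(drives), 2)
        target = a if load[a] <= load[b] else b
        load[target] += 1
    return max(load)


if __name__ == "__main__":
    one = max_load_one_choice(1000, 20000, random.Random(1))
    two = max_load_two_choices(1000, 20000, random.Random(1))
    print(f"average load 20, worst drive: one choice={one}, two choices={two}")
```

Both strategies need only constant work per write, with no global view of the fleet, yet the two-choice variant keeps the worst drive much closer to the average.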

Onboarding New Disks in the S3 Fleet

The Art of Integration

Okay, so everything works just fine with the setup we have so far. But wait! What happens when new drives are added to the immense S3 fleet? It's not as simple as a plug-and-play, thanks to the "thermodynamics" of data behavior.

The software running on each S3 storage node essentially operates a powerful log-structured file system. This is what the AWS team calls "ShardStore".

ShardStore deserves more space than this article can give it. I will soon be writing a detailed article on it, where we will dive deeper into the specifics.

Coming back to new drives, the key observation about data behaviour in S3 is:

Data "Temperature":

Hot Data: Younger and smaller objects are typically accessed more frequently.

Cold Data: Older and larger objects tend to be accessed less frequently.

This means a drive holding "hot" data today will naturally become "colder" over time as its data ages. In other words, drives gradually cool down.

AWS leverages this fundamental data behaviour for traffic distribution. Imagine adding a new disk rack to an S3 Availability Zone. If this new rack immediately started serving all fresh, "hot" traffic, it would quickly become a performance bottleneck: it would "melt down."

Instead, S3 employs a strategic process:

Warm-Up Phase: When a new disk joins the system, it doesn't immediately take on new, hot writes.

Cold Data Migration: Instead, a portion of "cold" objects from existing, busy disks and racks are actively migrated to the new disks, filling them up to approximately 80% capacity.

Gradual Integration: Only once this "cold benchmark" is met does the new rack (and the older ones that offloaded the data) begin to serve new, hot traffic, gradually integrating into the fleet.

This intelligent data migration ensures that new hardware is ramped up smoothly, preventing hotspots and maintaining consistent performance across the entire fleet. It also means that data objects aren't static; they are frequently "moved" around among S3's vast number of drives and racks as part of this continuous rebalancing and optimization.
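The warm-up step can be modelled roughly as follows. Everything specific here is an assumption made for illustration: objects are `(size, age_days)` tuples, "cold" means older than a hypothetical `cold_after_days` cutoff, and the oldest objects are moved first until the new drive reaches the 80% target mentioned above.

```python
def warm_up_new_drive(existing, capacity, target_fill=0.8, cold_after_days=90):
    """Move cold objects from existing drives onto a new, empty drive.

    existing: list of drives, each a list of (size, age_days) tuples.
    Returns the new drive's contents; the existing drives are updated in place.
    """
    budget = target_fill * capacity
    # Collect cold objects only, oldest (coldest) first.
    candidates = sorted(
        (
            (obj, drive)
            for drive in existing
            for obj in drive
            if obj[1] >= cold_after_days
        ),
        key=lambda pair: pair[0][1],
        reverse=True,
    )
    new_drive, used = [], 0
    for obj, drive in candidates:
        size = obj[0]
        if used + size > budget:
            continue  # does not fit; a smaller cold object might
        drive.remove(obj)
        new_drive.append(obj)
        used += size
    return new_drive
```

After this step the old drives have free space again and the new drive is mostly full of cold data, so fresh writes can be spread over all of them.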

The Repair Fleet

Countering Inevitable Failures

Hard drives, being mechanical, electrical, and electronic devices, are inherently prone to failure. In a system as vast as S3, drive failures are not an exception but a constant reality. Consequently, S3 maintains a robust data repair mechanism for detected failures.

The challenge intensifies with large-scale failures, such as a power outage impacting an entire rack or, in extreme scenarios, a whole Availability Zone. To address this, S3 needs its repair rate to consistently match or exceed its failure rate, which is crucial for maintaining its 11-nines durability goal.

Their solution involves:

Automated Feedback Loop: S3 runs an automated feedback loop that ties repair capacity to the failures it observes.

Failure Detectors: This loop uses sophisticated failure detectors that stay on top of all the failures occurring across the fleet.

Dynamic Scaling: The detectors also inform and enable the repair fleet to dynamically scale up or down its operations.

This continuous, adaptive scaling ensures S3 can rapidly recover from widespread failures, upholding its high durability standards even under extreme conditions.
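The rule "repair rate must match or exceed failure rate" boils down to simple arithmetic. The sketch below is a hypothetical sizing function, not S3's controller: the rates, the 20% headroom, and the name `repair_workers_needed` are all made up for illustration.

```python
def repair_workers_needed(failures_per_hour, repairs_per_worker_hour, headroom_pct=20):
    """Workers required so the repair rate meets or exceeds the failure rate.

    Integer math keeps the ceiling exact; at least one worker is always kept.
    """
    target = failures_per_hour * (100 + headroom_pct)  # scaled by 100
    capacity = repairs_per_worker_hour * 100
    return max(1, -(-target // capacity))  # ceiling division


if __name__ == "__main__":
    # Observed failure rates from the detectors: normal, rack outage, recovery.
    for failures in (100, 5000, 200):
        workers = repair_workers_needed(failures, repairs_per_worker_hour=10)
        print(f"{failures} failures/hour -> {workers} repair workers")
```

Running this on each fresh reading from the failure detectors gives the scale-up during an outage and the scale-down afterwards.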

Intelligent-Tiering

A Smart Companion in Cost Optimization

While not strictly a core architectural design pattern like those discussed, S3 Intelligent-Tiering represents an interesting and highly practical innovation in S3's offering. It is designed to optimize storage costs for data with unpredictable access patterns.

S3 provides a range of storage classes, each tailored for different access frequencies and cost profiles:

S3 Standard: General-purpose storage for frequently accessed data.

S3 Standard-IA: Infrequent Access – for data accessed less often but requiring rapid retrieval.

S3 One Zone-IA: Infrequent Access, single AZ – for data that needs infrequent access but can tolerate zonal loss.

S3 Glacier Instant Retrieval: Archival data, instant retrieval – for long-lived archives needing immediate access.

S3 Glacier Flexible Retrieval: Archival data, flexible retrieval options – for archives with retrieval times from minutes to hours.

S3 Glacier Deep Archive: Lowest cost archival data, longest retrieval times – for long-term data retention at minimal cost.

S3 Intelligent-Tiering removes the guesswork. When you select this storage class, S3 automatically monitors your data's access patterns and moves it between tiers to optimize costs, without performance impact. For example:

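If an object in the Frequent Access tier isn't accessed for 30 consecutive days, it moves to the Infrequent Access tier, and after 90 days to the Archive Instant Access tier. If you opt in to the asynchronous archive tiers, objects unused for 90 days or more can instead move to the lower-cost Archive Access tier.

If it remains cold for 180 days or more, and you have opted in, it moves to the even more cost-effective Deep Archive Access tier.

Crucially, if the data is accessed at any point, it's automatically returned to the Frequent Access tier. (Objects in the opt-in archive tiers have to be restored first.)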

This dynamic, automated tiering offers users significant cost savings and flexibility, ensuring they pay only for the storage access they truly need.
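The tiering rule is essentially a lookup on "days since last access". Here is a minimal sketch using the 90-day and 180-day thresholds quoted above as defaults; the function name and the threshold table are hypothetical, and the table is a parameter so that other tiers can be added.

```python
# (minimum idle days, tier name); defaults mirror the thresholds quoted above.
DEFAULT_THRESHOLDS = [(180, "Deep Archive Access"), (90, "Archive Access")]


def tier_for(days_since_last_access, thresholds=DEFAULT_THRESHOLDS):
    """Return the tier an object would sit in after being idle this long."""
    # Check the largest threshold first so the coldest matching tier wins.
    for min_days, tier in sorted(thresholds, reverse=True):
        if days_since_last_access >= min_days:
            return tier
    return "Frequent Access"
```

An access resets the idle counter to zero, so `tier_for(0)` returning "Frequent Access" models the object moving back to the hot tier.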

This article has merely scratched the surface of the sophisticated engineering behind AWS S3. While we've unveiled some of the brilliant design patterns and architectural decisions that empower its incredible scale, durability, and performance, there's always a deeper layer to explore. If these insights have ignited your curiosity and you're eager to delve further into the distributed systems magic that makes S3 tick, let us know! Your interest guides our next deep dive.